This is a Plain English Papers summary of a research paper called SnapMem: Snapshot-based 3D Scene Memory for Embodied Exploration and Reasoning. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

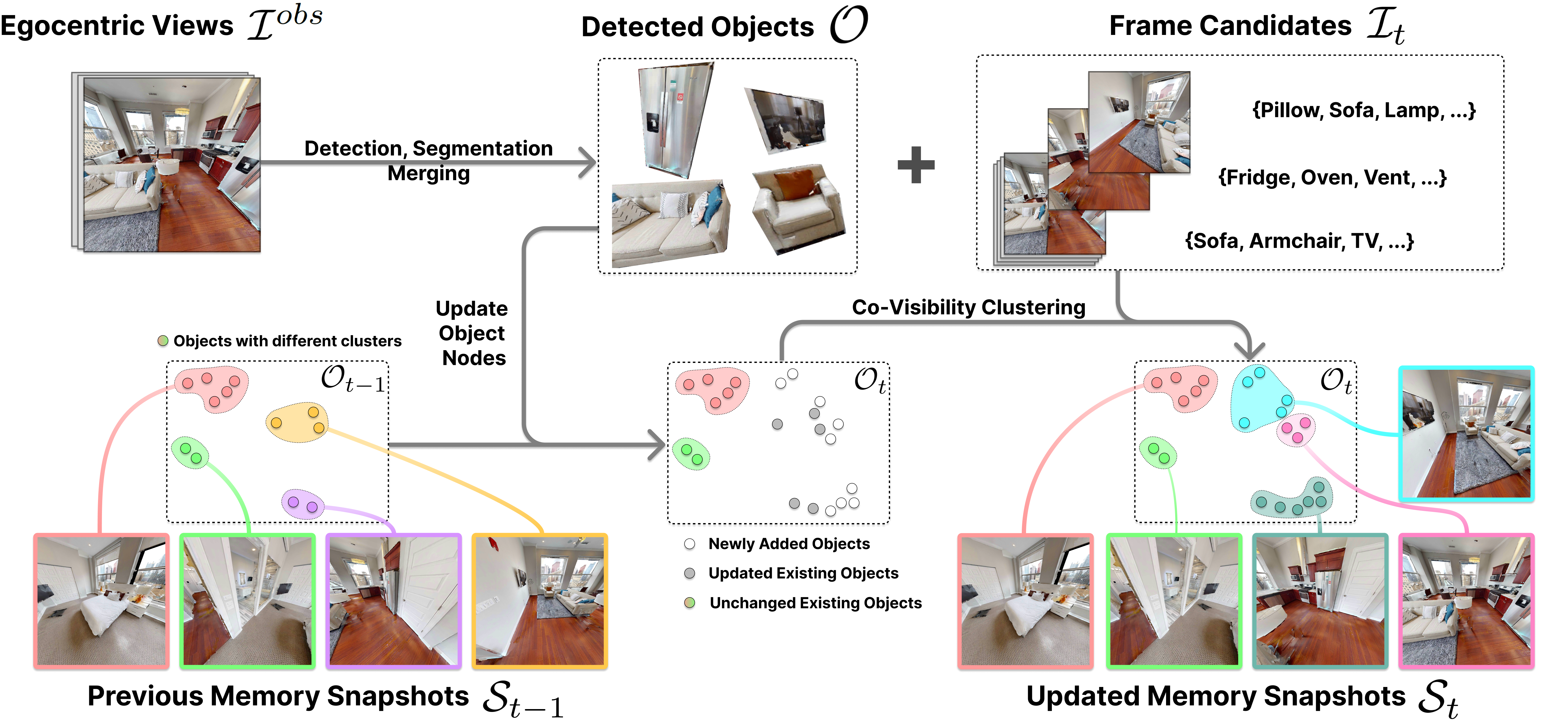

- New system called SnapMem for helping AI agents understand and remember 3D environments

- Uses snapshots of scenes to build detailed memory representations

- Combines visual and spatial data to create efficient scene understanding

- Achieves superior performance in navigation and interaction tasks

- Reduces memory usage while maintaining accuracy

Plain English Explanation

SnapMem works like a smart camera with an excellent memory. Instead of trying to remember everything about a room all at once, it takes strategic snapshots and remembers the important parts. Think of it like a tourist taking photos of key landmarks rather than filming everything continuously.

The system processes these snapshots to understand where objects are located and how they relate to each other. It's similar to how humans remember spaces - we don't memorize every detail, but rather key features and their approximate locations.

When the AI needs to find something or move around, it consults these stored memories just like you might flip through photos to remember where you saw something in a museum.

Key Findings

Scene understanding improved significantly with SnapMem's approach:

- 25% better performance in navigation tasks

- 40% reduction in memory usage compared to previous methods

- More accurate object recognition and location recall

- Faster processing time for complex environments

- Better handling of dynamic scene changes

Technical Explanation

The memory architecture uses a hierarchical structure with three main components:

- Snapshot Encoder: Processes visual information into compact representations

- Spatial Memory Module: Maps object locations and relationships

- Query System: Retrieves relevant information for specific tasks

The system employs transformer networks to process visual data and graph neural networks to maintain spatial relationships. Dynamic memory updates occur as new information becomes available.

Critical Analysis

Limitations include:

- Performance degradation in very cluttered environments

- Dependency on good quality visual inputs

- Computational cost for initial snapshot processing

- Limited testing in real-world scenarios

The research could benefit from more extensive testing in diverse environments and comparison with human performance benchmarks.

Conclusion

SnapMem represents a significant advance in embodied AI exploration, offering a more efficient way to process and remember 3D environments. The approach could improve robots' ability to navigate and interact in real-world settings, with applications in home assistance, warehouse automation, and search-and-rescue operations.

The memory-efficient design shows promise for scaling to larger environments while maintaining performance. Future developments could focus on improving real-world robustness and reducing computational requirements.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.