This is a Plain English Papers summary of a research paper called RelaCtrl: Relevance-Guided Efficient Control for Diffusion Transformers. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- RelaCtrl introduces relevance-guided efficient control for Diffusion Transformers (DiTs)

- Improves computational efficiency while maintaining image quality

- Novel architecture combining DiT and ControlNet approaches

- Reduces computational overhead by 30-50%

- Achieves comparable or better results than baseline methods

Plain English Explanation

RelaCtrl offers a more efficient way to generate AI images. Conventional methods process every part of an image with equal intensity, which wastes computing power. RelaCtrl works more like human attention: it spends more processing power on the important parts of an image and less on background elements.

Think of it like a painter who spends more time on the subject's face than on the blurry background. By prioritizing what matters most in each scene, RelaCtrl makes text-to-image generation more efficient.

The system combines two powerful AI approaches: Diffusion Transformers (DiT) for high-quality image creation and ControlNet for precise control over the output. Combining the two yields a pipeline that saves computational resources without sacrificing image quality.
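
The summary does not describe how the control branch is wired into the DiT. For context, the general ControlNet pattern can be sketched in a few lines of NumPy: a trainable copy of a backbone block receives the control signal (for example an edge or depth map), and its output is added back to the frozen backbone through a projection initialized to zero, so training starts from the unchanged base model. All names here (`frozen_block`, `control_block`, `W_zero`) are hypothetical, and toy linear maps stand in for real transformer blocks; this illustrates ControlNet in general, not RelaCtrl's specific architecture.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8          # hidden size (toy value)
n_tokens = 4   # number of image tokens (toy value)

# Hypothetical frozen backbone block: a fixed linear map plus a residual.
W_frozen = rng.normal(scale=0.1, size=(d, d))


def frozen_block(h):
    return h + h @ W_frozen


# Hypothetical trainable control branch, initialized as a copy of the backbone weights.
W_control = W_frozen.copy()


def control_block(h, c):
    # The control branch sees the hidden state plus the encoded control signal.
    x = h + c
    return x + x @ W_control


# Zero-initialized projection: at the start of training the control branch
# contributes nothing, so the combined model reproduces the frozen backbone.
W_zero = np.zeros((d, d))


def controlled_block(h, c):
    return frozen_block(h) + control_block(h, c) @ W_zero


h = rng.normal(size=(n_tokens, d))
c = rng.normal(size=(n_tokens, d))  # stand-in for an encoded edge or depth map
out = controlled_block(h, c)
print(np.allclose(out, frozen_block(h)))  # True at initialization
```

During training, gradients move `W_zero` away from zero, and the control signal gradually starts to steer the output.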

Key Findings

The research demonstrates that RelaCtrl achieves:

- 30-50% reduction in computational costs compared to standard methods

- Equal or better image quality versus baseline approaches

- More precise control over generated image content

- Improved handling of complex scenes with multiple elements

Image generation quality remained consistent even with reduced computation, suggesting that selective processing works effectively.

Technical Explanation

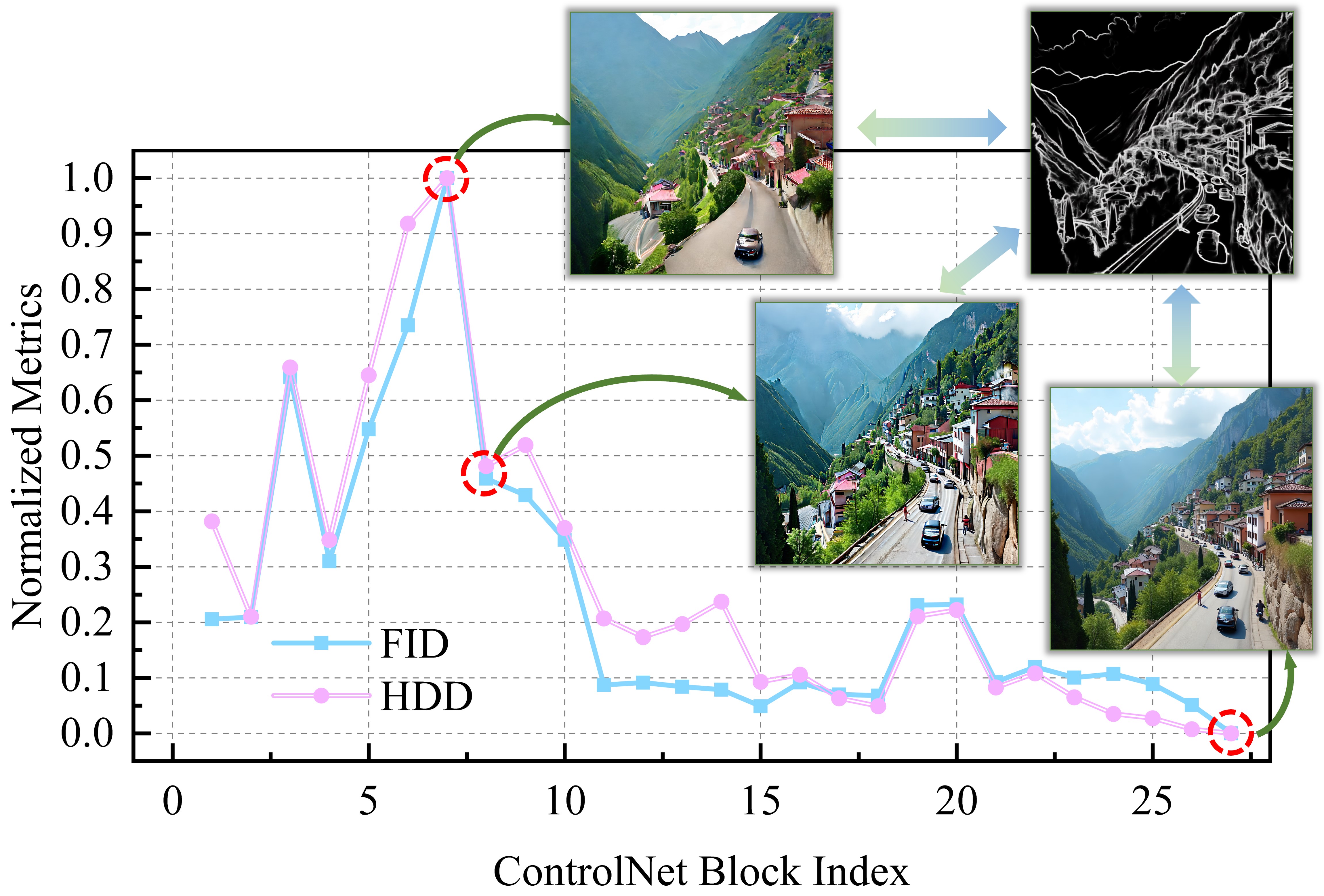

The architecture uses a relevance prior mechanism to determine which image regions require more intensive processing. This works through a two-stage process:

- Initial assessment of regional importance using lightweight networks

- Adaptive allocation of computational resources based on relevance scores
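
The two stages above can be sketched as follows. The summary does not say what the lightweight networks look like or how the compute budget is divided, so this sketch assumes a single linear layer with a sigmoid as the scorer and top-k routing as the allocation rule. `relevance_scores`, `heavy_path`, `light_path`, and `adaptive_forward` are hypothetical names, and the two paths are toy stand-ins for an expensive transformer block and a cheap skip.

```python
import numpy as np


def relevance_scores(tokens, w, b=0.0):
    """Stage 1 (hypothetical): a lightweight linear scorer with a sigmoid that
    gives each token (image region) a relevance score in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-(tokens @ w + b)))


def heavy_path(x):
    # Stand-in for an expensive transformer block.
    return 2.0 * np.tanh(x)


def light_path(x):
    # Stand-in for a cheap update: here the region skips the block entirely.
    return x


def adaptive_forward(tokens, w, keep_ratio=0.5):
    """Stage 2 (hypothetical): only the highest-scoring regions take the
    heavy path; all other regions take the light path."""
    scores = relevance_scores(tokens, w)
    k = max(1, int(round(keep_ratio * len(tokens))))
    top = np.argsort(scores)[::-1][:k]  # indices of the k most relevant regions
    out = light_path(tokens.copy())
    out[top] = heavy_path(tokens[top])
    return out, top, scores


rng = np.random.default_rng(0)
tokens = rng.normal(size=(8, 4))  # 8 regions with 4 features each (toy sizes)
w = rng.normal(size=4)            # hypothetical scorer weights
out, top, scores = adaptive_forward(tokens, w, keep_ratio=0.25)
print(sorted(top.tolist()), "use the heavy path")
```

A fixed `keep_ratio` makes the cost of each forward pass predictable. Thresholding the scores instead would let the amount of heavy processing vary from image to image, at the price of a less predictable budget.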

The system plugs into existing diffusion models through a modular design, which should make it straightforward to adopt in current pipelines. Within the transformer, the relevance prior guides the attention mechanism.
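
The summary does not specify how the relevance prior enters the attention computation. One standard way to inject a prior into attention is an additive bias on the logits. The sketch below assumes that design and adds the log of each key token's relevance, so each key's unnormalized weight is scaled by its relevance. The function name and the log-bias choice are assumptions made for illustration, not details from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def relevance_biased_attention(q, k, v, relevance, eps=1e-6):
    """Scaled dot-product attention with a hypothetical relevance prior.

    relevance has shape (n_keys,) with values in [0, 1]. Adding
    log(relevance) to the logits scales each key's unnormalized attention
    weight by its relevance before the softmax renormalizes each row.
    """
    d = q.shape[-1]
    logits = q @ k.T / np.sqrt(d)
    logits = logits + np.log(relevance + eps)[None, :]
    weights = softmax(logits, axis=-1)
    return weights @ v, weights


rng = np.random.default_rng(1)
q = rng.normal(size=(3, 4))  # 3 query tokens
k = rng.normal(size=(5, 4))  # 5 key tokens
v = rng.normal(size=(5, 4))

# With uniform relevance the prior has no effect: plain attention is recovered.
out_uniform, w_uniform = relevance_biased_attention(q, k, v, np.ones(5))
out_plain = softmax(q @ k.T / np.sqrt(4)) @ v
print(np.allclose(out_uniform, out_plain))  # True

# A zero relevance score makes key 4 nearly invisible to every query.
_, w_masked = relevance_biased_attention(q, k, v, np.array([1.0, 1.0, 1.0, 1.0, 0.0]))
print(w_masked[:, 4])
```

Because the bias is added before the softmax, uniform relevance leaves attention unchanged. This property could let a prior like this be added to a pretrained model without disturbing its initial behavior.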

Critical Analysis

While RelaCtrl shows promising results, several limitations exist:

- Performance may vary with extremely complex scenes

- The relevance assessment adds a small computational overhead

- The current implementation focuses on static images rather than video

- Dynamic relevance adjustment requires further research
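
The overhead limitation can be made concrete with a back-of-the-envelope cost model. The functions and every number below are hypothetical, not measurements from the paper. The model normalizes the cost of fully processing one region to 1. A fraction `keep_ratio` of regions takes the full path, and the rest take a cheaper path costing `light_cost`. The relevance assessment adds a fixed overhead `scorer_cost`.

```python
def relative_cost(keep_ratio, scorer_cost, light_cost=0.0):
    """Cost of relevance-guided processing relative to running the full
    (heavy) path on every region, whose per-region cost is normalized to 1.

    keep_ratio:  fraction of regions sent through the heavy path
    scorer_cost: relevance-assessment overhead, as a fraction of the full cost
    light_cost:  cost of the cheap path per region, relative to the heavy path
    """
    if not 0.0 <= keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in [0, 1]")
    return scorer_cost + keep_ratio + (1.0 - keep_ratio) * light_cost


def savings(keep_ratio, scorer_cost, light_cost=0.0):
    """Fraction of compute saved versus processing every region fully."""
    return 1.0 - relative_cost(keep_ratio, scorer_cost, light_cost)


# Hypothetical settings, chosen only to illustrate the trade-off.
for keep, overhead in [(0.5, 0.05), (0.6, 0.05), (0.9, 0.15)]:
    print(f"keep={keep:.0%} overhead={overhead:.0%} -> saved {savings(keep, overhead):.0%}")
```

Under this model the scheme saves compute only while `scorer_cost < (1 - keep_ratio) * (1 - light_cost)`. The last setting in the loop violates that condition and ends up costing more than processing every region fully.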

The approach could benefit from exploring video generation applications and real-time processing scenarios.

Conclusion

RelaCtrl represents a significant step toward more efficient AI image generation. By allocating computational resources according to relevance, it reduces computational cost while maintaining image quality. This approach could help make advanced AI image generation more accessible and practical for wider applications.

The principles demonstrated could influence future developments in efficient AI control systems beyond image generation.
