This is a Plain English Papers summary of a research paper called Long Context vs. RAG for LLMs: An Evaluation and Revisits. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

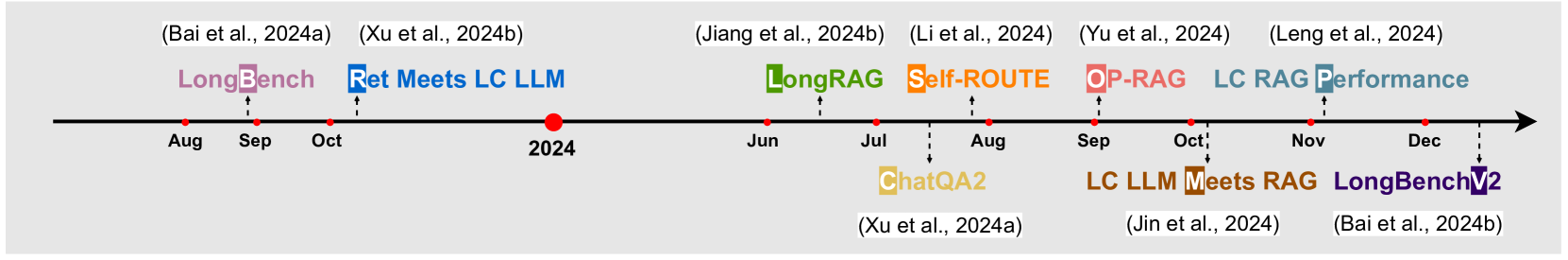

- Research comparing effectiveness of long context LLMs vs Retrieval-Augmented Generation (RAG)

- Analysis of performance across information retrieval and question answering tasks

- Examination of strengths and limitations of each approach

- Investigation of potential hybrid solutions combining both methods

- Assessment of computational costs and practical implementation considerations

Plain English Explanation

Long context LLMs and RAG represent two different ways to help AI systems work with large amounts of information. Think of long context LLMs as speed readers who can take in huge amounts of text at once, while RAG systems work more like librarians who find and fetch specific relevant information.
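To make the speed reader versus librarian contrast concrete, here is a minimal sketch of how each approach might assemble a prompt. It is not the paper's setup. The helper names (`long_context_prompt`, `rag_prompt`) are hypothetical. The retriever is a toy bag-of-words cosine similarity standing in for the embedding-based retrievers real RAG systems typically use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercased word tokens; a crude stand-in for a real tokenizer."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def long_context_prompt(docs: list[str], question: str) -> str:
    """'Speed reader': place every document in the prompt."""
    return "\n\n".join(docs) + f"\n\nQuestion: {question}"


def rag_prompt(docs: list[str], question: str, k: int = 2) -> str:
    """'Librarian': keep only the k documents most similar to the question."""
    query = Counter(tokenize(question))
    ranked = sorted(docs, key=lambda d: cosine(query, Counter(tokenize(d))), reverse=True)
    return "\n\n".join(ranked[:k]) + f"\n\nQuestion: {question}"


docs = [
    "The Eiffel Tower is in Paris.",
    "Photosynthesis converts light into chemical energy.",
    "Paris is the capital of France.",
]
print(rag_prompt(docs, "Where is the Eiffel Tower?", k=1))
```

The long context prompt grows with the whole collection. The RAG prompt stays at k documents no matter how large the collection gets, but it depends entirely on the retriever ranking the right documents first.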

The research explores which approach works better for different tasks. Long context models excel at understanding complex relationships across large texts but require significant computing power. RAG systems are more efficient and can access larger knowledge bases, but may miss subtle connections between pieces of information.
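A rough back-of-the-envelope sketch shows why the computing cost diverges. It assumes standard full self-attention, whose cost grows with the square of the sequence length. The corpus and chunk sizes are hypothetical, not figures from the paper, and `compare_costs` is an illustrative helper.

```python
def attention_cost_proxy(n_tokens: int) -> int:
    """Relative cost of full self-attention, which grows with the square of sequence length."""
    return n_tokens * n_tokens


def compare_costs(corpus_tokens: int, chunk_tokens: int, k: int, question_tokens: int = 50) -> dict:
    """Contrast feeding the whole corpus with feeding k retrieved chunks."""
    long_ctx = corpus_tokens + question_tokens  # everything goes into the window
    rag = k * chunk_tokens + question_tokens    # only the retrieved chunks go in
    return {
        "long_context_tokens": long_ctx,
        "rag_tokens": rag,
        "token_ratio": long_ctx / rag,
        "attention_ratio": attention_cost_proxy(long_ctx) / attention_cost_proxy(rag),
    }


# Hypothetical sizes: a 100,000-token corpus versus 5 retrieved chunks of 500 tokens each.
print(compare_costs(corpus_tokens=100_000, chunk_tokens=500, k=5))
```

With these made-up numbers, the RAG prompt is roughly 39 times shorter and the attention proxy roughly 1,540 times smaller. The real gap is narrower, because much of a model's compute scales linearly with length and retrieval adds its own overhead.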

Key Findings

The study found that RAG systems generally performed better on fact-based questions and targeted information retrieval. Long context models performed better on tasks that require a deep understanding of how different parts of a text relate to one another.

The main takeaways were:

- RAG systems were more computationally efficient

- Long context models provided more coherent responses

- Hybrid approaches combining both methods showed promise

- Cost-effectiveness favored RAG for most practical applications
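The summary does not describe which hybrid designs were tested. One common pattern, sketched here as an assumption rather than the paper's method, uses retrieval to rank chunks and then packs as many as fit into a long context token budget. The function names and the word-overlap relevance score are hypothetical stand-ins.

```python
import re


def tokens(text: str) -> list[str]:
    """Lowercased word tokens; word count doubles as a rough token estimate."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(question: str, chunk: str) -> int:
    """Number of distinct question words that appear in the chunk (toy relevance score)."""
    return len(set(tokens(question)) & set(tokens(chunk)))


def hybrid_context(chunks: list[str], question: str, budget_tokens: int) -> list[str]:
    """Rank chunks like a retriever, then fill a long context window up to the budget."""
    ranked = sorted(chunks, key=lambda c: overlap_score(question, c), reverse=True)
    selected, used = [], 0
    for chunk in ranked:
        cost = len(tokens(chunk))
        if used + cost > budget_tokens:
            continue  # skip chunks that would overflow; a smaller one may still fit
        selected.append(chunk)
        used += cost
    return selected
```

A large budget lets the model see more surrounding material than plain top-k retrieval would, while the ranking keeps the most relevant chunks from being crowded out.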

Technical Explanation

The research implemented a systematic comparison using standardized benchmarks. The evaluation framework tested both approaches across multiple dimensions including accuracy, latency, and resource utilization.
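A minimal harness for measuring accuracy and latency side by side might look like the following. `answer_fn` is a hypothetical callable that could wrap either a long context or a RAG pipeline. Normalized exact match is an assumed accuracy metric, not necessarily the one the study used. Resource utilization is left out for brevity.

```python
import statistics
import time
from typing import Callable


def normalize(answer: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count as errors."""
    return " ".join(answer.lower().split())


def evaluate(answer_fn: Callable[[str], str], dataset: list[tuple[str, str]]) -> dict:
    """Run every (question, gold answer) pair, recording exact-match accuracy and wall-clock latency."""
    correct = 0
    latencies = []
    for question, gold in dataset:
        start = time.perf_counter()
        prediction = answer_fn(question)
        latencies.append(time.perf_counter() - start)
        correct += int(normalize(prediction) == normalize(gold))
    return {
        "accuracy": correct / len(dataset),
        "mean_latency_s": statistics.mean(latencies),
    }


# Hypothetical stand-in system: a canned lookup instead of a real LLM pipeline.
canned = {"Capital of France?": " paris ", "2 + 2?": "5"}
dataset = [("Capital of France?", "Paris"), ("2 + 2?", "4")]
print(evaluate(lambda q: canned[q], dataset))
```

Running both systems through the same harness and dataset keeps the comparison fair, because any difference in the numbers then comes from the systems rather than the measurement.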

The experimental setup varied the context window size for the long context models and the retrieval mechanism for the RAG systems. Results were assessed with ROUGE scores, human evaluation, and measurements of computational efficiency.
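ROUGE measures how much a generated answer overlaps with a reference answer. The sketch below computes ROUGE-1 (clipped unigram overlap) precision, recall, and F1. The summary does not say which ROUGE variant the study reported. Reference implementations such as Google's `rouge-score` package also offer stemming and use their own tokenization, so their scores may differ slightly.

```python
import re
from collections import Counter


def rouge1(reference: str, candidate: str) -> dict:
    """ROUGE-1 precision, recall, and F1 from clipped unigram overlap."""
    ref = Counter(re.findall(r"[a-z0-9]+", reference.lower()))
    cand = Counter(re.findall(r"[a-z0-9]+", candidate.lower()))
    overlap = sum((ref & cand).values())  # each word counts at most min(ref, cand) times
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


print(rouge1("the cat sat on the mat", "the cat lay on the mat"))
```

In the example, five of the six candidate words match the reference, so precision, recall, and F1 are all 5/6. Because ROUGE only rewards word overlap, it is usually paired with human evaluation, as this study did.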

Critical Analysis

Several limitations deserve consideration:

- Limited testing across different types of content

- Potential bias in retrieval mechanism selection

- Computational resource constraints affecting test scope

Further research could explore more sophisticated hybrid approaches and investigate performance across a broader range of use cases. Because the study focused on English-language content, its findings may not generalize to multilingual applications.

Conclusion

The research demonstrates that neither approach definitively outperforms the other across all scenarios. The optimal choice depends on specific use case requirements, available computational resources, and the nature of the information being processed. Future developments will likely focus on creating more efficient hybrid systems that combine the strengths of both approaches.
