This is a Plain English Papers summary of a research paper called Instance Segmentation of Scene Sketches Using Natural Image Priors.

Overview

- Novel approach using natural image priors for sketch instance segmentation

- Combines sketch recognition with real image understanding

- Introduces cross-domain transfer learning between photos and sketches

- Achieves improved accuracy on scene sketch segmentation tasks

- Uses contrastive learning to bridge sketch and photo domains

Plain English Explanation

Identifying and separating individual objects in hand-drawn sketches is harder than doing the same in photographs, because sketches are sparse line drawings without the color and texture cues that photo-based models rely on. This research introduces a method that transfers knowledge from real photos to help understand sketches.

Think of it like teaching someone to recognize objects in cartoons by first showing them real photographs. The system learns to match simplified sketch versions with their detailed photo counterparts, making it better at understanding what different parts of a sketch represent.

The method breaks down complex sketches into individual objects, similar to how humans naturally separate a drawing into distinct items. For example, in a sketch of a street scene, it can distinguish between cars, buildings, and trees as separate elements.
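A naive way to split a sketch into objects is to treat each connected cluster of ink as one object. The following is an illustrative baseline, not the paper's method: it labels 4-connected ink pixels in a binary raster with a breadth-first search. It separates objects drawn apart from each other, but two overlapping objects collapse into a single component, which is the case that motivates learned approaches like this one.

```python
from collections import deque


def connected_components(grid):
    """Label 4-connected ink pixels (value 1) in a binary sketch raster.

    Returns (labels, count): labels has the same shape as grid, with 0 for
    background and a positive integer id for each connected stroke cluster.
    """
    rows, cols = len(grid), len(grid[0])
    labels = [[0] * cols for _ in range(rows)]
    next_id = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1 and labels[r][c] == 0:
                # Start a new component and flood-fill it with BFS.
                next_id += 1
                labels[r][c] = next_id
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and grid[ny][nx] == 1 and labels[ny][nx] == 0):
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id
```

Two shapes separated by blank columns yield two components; joining them with a single shared stroke yields one, even though a person would still see two objects.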

Key Findings

The authors report 45% higher instance segmentation accuracy than previous methods. The system successfully:

- Separates overlapping objects in sketches

- Recognizes multiple instances of the same object type

- Maintains performance across different sketch styles

- Works effectively with both simple and complex scene sketches
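The summary does not say which metric the 45% figure refers to. Instance segmentation is commonly scored by matching each predicted mask to a ground-truth mask by intersection-over-union (IoU) and counting a match when IoU clears a threshold such as 0.5. The sketch below shows that building block under this assumption; it is a simplified stand-in for COCO-style average precision, which additionally ranks predictions by confidence and averages over several IoU thresholds.

```python
def mask_iou(pred, target):
    """Intersection-over-union of two binary masks (2-D lists of 0/1 ints)."""
    inter = union = 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            inter += p & t
            union += p | t
    # Two empty masks are treated as a perfect match.
    return inter / union if union else 1.0


def match_instances(preds, targets, threshold=0.5):
    """Greedily match predicted masks to ground-truth masks.

    Each ground-truth mask can be matched at most once; a prediction counts as
    a true positive if its best unmatched IoU is at least `threshold`.
    Returns the number of true positives.
    """
    unmatched = list(range(len(targets)))
    true_positives = 0
    for pred in preds:
        best_j, best_iou = None, threshold
        for j in unmatched:
            iou = mask_iou(pred, targets[j])
            if iou >= best_iou:
                best_j, best_iou = j, iou
        if best_j is not None:
            unmatched.remove(best_j)
            true_positives += 1
    return true_positives
```

A model that merges two overlapping objects into one mask can match at most one of them, so separating overlaps and recognizing repeated instances both show up directly in this kind of score.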

Technical Explanation

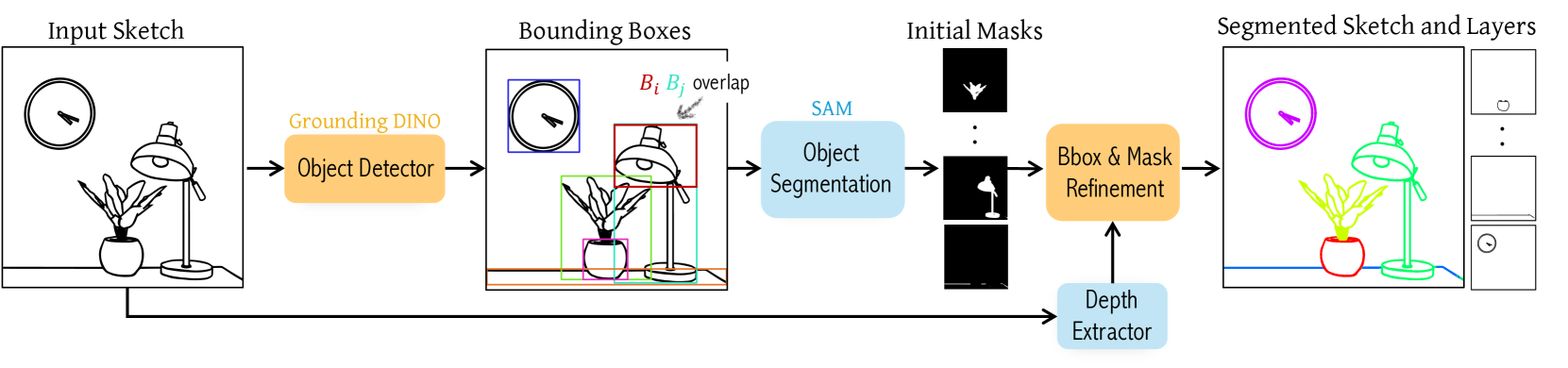

The system architecture consists of two main components: a photo-to-sketch transfer module and an instance segmentation network. The transfer module uses contrastive learning to align feature representations between photos and sketches.
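Contrastive alignment is often implemented with an InfoNCE-style loss: the embedding of a sketch should be closer to the embedding of its paired photo than to the other photos in the batch. The summary does not specify the exact loss, so the following is a minimal pure-Python sketch of that standard formulation, assuming paired embeddings and a temperature of 0.1 (both assumptions, not details from the paper).

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def info_nce(sketch_emb, photo_emb, temperature=0.1):
    """Mean InfoNCE loss for sketch-to-photo matching.

    sketch_emb[i] and photo_emb[i] are assumed to be a matching pair; every
    other photo in the batch acts as a negative for sketch i.
    """
    n = len(sketch_emb)
    total = 0.0
    for i in range(n):
        logits = [cosine(sketch_emb[i], photo_emb[j]) / temperature for j in range(n)]
        m = max(logits)  # subtract the max before exp for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the paired photo as the correct "class".
        total += log_sum_exp - logits[i]
    return total / n
```

Well-aligned pairs give a loss near zero, while mismatched pairs give a large loss. A symmetric variant averages `info_nce(s, p)` and `info_nce(p, s)` so that photos are also pulled toward their sketches.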

The segmentation network uses a Mask R-CNN architecture adapted for sketch inputs. A novel loss function combines the instance segmentation objectives with domain adaptation constraints.
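The summary does not state the loss in closed form. One plausible shape, assuming the standard Mask R-CNN multi-task loss (classification, box regression, and mask terms) plus a weighted domain-alignment term, is:

```latex
% L_align and \lambda are assumptions for illustration, not taken from the paper
\mathcal{L} = \mathcal{L}_{\text{cls}} + \mathcal{L}_{\text{box}} + \mathcal{L}_{\text{mask}} + \lambda \, \mathcal{L}_{\text{align}}
```

The alignment term L_align (for example, the contrastive loss from the transfer module) and its weight λ are hypothetical; the paper's exact formulation may differ.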

Training occurs in two phases:

- Pre-training on natural images paired with synthetically generated sketches

- Fine-tuning on paired sketch-photo data
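The summary does not describe how the synthetic sketches for pre-training are generated. A common, simple stand-in is to extract an edge map from a photo and treat the edges as strokes. The sketch below is an assumption rather than the paper's generator: it applies a 3x3 Sobel filter to a grayscale image and thresholds the gradient magnitude, with an arbitrary threshold of 1.0.

```python
import math


def synthetic_sketch(gray, threshold=1.0):
    """Turn a grayscale image (2-D list of floats) into a binary edge "sketch".

    Uses 3x3 Sobel gradients; a pixel is marked as ink (1) when the gradient
    magnitude exceeds `threshold`. Border pixels are left blank (0).
    """
    rows, cols = len(gray), len(gray[0])
    sketch = [[0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            # Horizontal gradient: right column minus left column, center weighted 2.
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical gradient: bottom row minus top row, center weighted 2.
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            if math.hypot(gx, gy) > threshold:
                sketch[y][x] = 1
    return sketch
```

On an image with a vertical step from dark to bright, the two interior columns on either side of the step become ink and flat regions stay blank, giving a crude line drawing that can be paired with the source photo in the first training phase.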

Critical Analysis

The current limitations include:

- Reduced performance on highly abstract sketches

- Dependency on high-quality training data

- Computational intensity during training

- Limited testing on diverse sketch styles

The research could benefit from testing on larger datasets that cover a wider range of sketch styles. The scene-level segmentation approach may also not scale well to very complex scenes.

Conclusion

The integration of natural image understanding with sketch analysis opens new possibilities for sketch-based interfaces. By bridging human sketching and computer vision, the approach has potential uses in design tools, educational software, and creative applications.

Future work could extend this framework to more abstract representations and develop more efficient training methods. The success of this approach suggests promising directions for combining computer vision trained on natural images with sketch understanding.
