How to improve your semantic search with hypothetical document embeddings

How to use a simple LLM call to dramatically improve the quality of your semantic search results

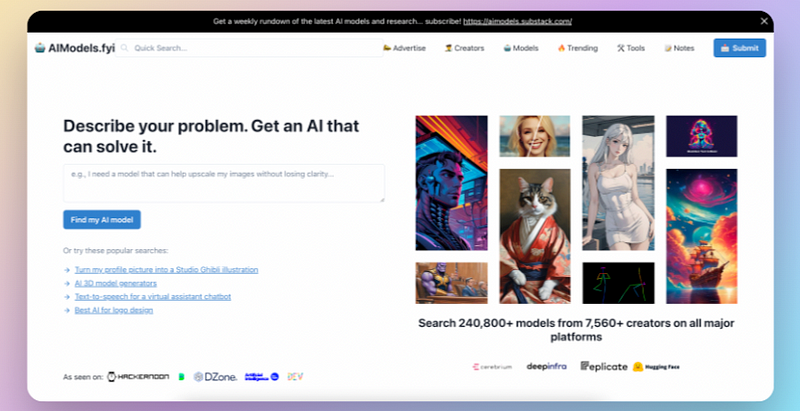

Finding the right AI model to build a workflow around is hard. With so many models available across different platforms, it’s impossible to know where to start or how to find the one that best fits your specific needs. That’s the problem I set out to solve with AIModels.fyi, the only search engine for discovering and comparing AI models from across the web.

The AIModels.fyi homepage — describe your problem, get an AI model that can solve it.

The site has a simple premise: describe your problem in natural language, and get back a list of AI models that can potentially solve it. For example, you might search for “I need a model that can help upscale my images without losing clarity” or “make my cat look like it’s singing”. The goal is to make it easy for anyone, not just ML engineers, to find useful AI tools for their projects.

AIModels.fyi is a reader-supported publication. To receive new posts and support my work subscribe and be sure to follow me on Twitter!

Under the hood, the search works by taking the user’s query and comparing it to a database of over 240,000 AI models from 7,500+ creators across major platforms like Hugging Face, Replicate, Cerebrium, and DeepInfra. When a user types in a search, the query gets embedded into a vector using OpenAI’s text-embedding-ada-002 model. This query vector is then compared to pre-computed vector representations of the model names, descriptions, and use cases in the database. The most similar models by cosine distance are returned as the search results.
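To make the comparison step concrete, here is a minimal sketch of cosine similarity in plain JavaScript (cosine distance is simply one minus this value). The function name and the toy three-dimensional vectors are illustrative assumptions; real text-embedding-ada-002 vectors have 1,536 dimensions, and in production the comparison would normally happen inside the vector database rather than in application code.

```javascript
// Cosine similarity between two equal-length numeric vectors.
// Returns a value in [-1, 1]; higher means more similar.
function cosineSimilarity(a, b) {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // treat a zero vector as dissimilar to everything
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy 3-dimensional vectors standing in for real 1536-dimensional embeddings
console.log(cosineSimilarity([1, 0, 0], [1, 0, 0])); // 1
console.log(cosineSimilarity([1, 0, 0], [0, 1, 0])); // 0
```

Two vectors pointing in the same direction score 1 and orthogonal vectors score 0, regardless of their lengths.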

Here’s a simplified version of the core search logic I’ve been using for about six months:

```javascript
const fetchData = async (query) => {
  // Embed the search query
  const embeddingResponse = await openAi.createEmbedding({
    model: "text-embedding-ada-002",
    input: query,
  });
  const embedding = embeddingResponse.data.data[0].embedding;

  // Find the most similar models in the vector database
  const { data: modelsData, error } = await supabase.rpc("search_models", {
    query_embedding: embedding,
    similarity_threshold: 0.75,
    match_count: 10,
  });
  // Surface database errors instead of silently returning null
  if (error) throw error;

  return modelsData;
};
```

This vector search approach works reasonably well for surfacing relevant models when there’s direct overlap between the query terms and the model descriptions. But it struggles with more abstract, complex or niche queries where the user’s language doesn’t exactly match the technical terminology in the database.

For example, I recently wanted to find a model that could animate a portrait image using an audio voice clip to create a talking head effect. I knew there were some good models out there for this, but my searches for things like “talking portrait from image and audio” weren’t turning up the results I expected. The problem was that my query terms didn’t have enough literal matches with the specific words used in the model descriptions, even though the semantic intent was similar.

This got me thinking about ways to improve the search experience to better handle those kinds of abstract or complex queries. How could I make the system smarter at understanding the user’s true intent and mapping it to relevant models in the database? That’s when I came across the idea of Hypothetical Document Embeddings (HyDE) from this research paper after reading about it in this Medium post.

Meet Mr. HyDE — The query expander!

Let’s turn a tiny little search phrase into a robust query! Photo by Wesley Tingey on Unsplash

The key insight behind HyDE is to use a large language model (LLM) to expand the user’s short query into a more detailed hypothetical “ideal” document before embedding it for search. So instead of directly matching the query embedding to the database embeddings, it first translates the query into a richer representation in the same semantic space as the real documents.

Here’s how the process works:

The user enters a natural language query expressing their needs, like “I want to convert an image into a 3D model.”

The query is fed into an instruction-tuned LLM like GPT-3 to generate a hypothetical document. The prompt asks the model to imagine an ideal document for answering the query.

This generated pseudo-document is then embedded into a dense vector using a model like OpenAI’s text-embedding-ada-002.

The resulting embedding is used to query the vector database and find the most similar real model documents.

For example, the second step might turn the query into a hypothetical document like this:

Query: I want to convert an image into a 3D model

Hypothetical Document: There are several AI models available that can convert a 2D image into a 3D model. The process is known as single-view reconstruction or 3D estimation. It typically involves using deep learning to predict the depth map and 3D shape from the 2D input image. Some popular models for this task include Pix2Vox, Pixel2Mesh, AtlasNet, and OccNet. These models are trained on large datasets of 3D objects and can handle complex shapes. To use them, you provide an input image and the model will output a 3D mesh or voxel representation that you can render or edit further in 3D modeling tools…

The LLM step helps bridge the gap between the user’s simple query and the more technical language used in the model descriptions. It expands on the initial query to infer additional relevant terms, concepts, and context that can help retrieve better matches. At the same time, the search index still contains only real document embeddings, which keeps the results grounded: even if the LLM invents details in the hypothetical document, only models that actually exist in the database can be returned.

I implemented a basic version of HyDE on top of the existing AIModels search, and the initial results are promising. In some sample queries I tested, HyDE surfaced two to three times more relevant models than the original approach of embedding the raw query directly. For the talking head portrait query, it was able to generate a hypothetical document with key terms like “lip sync”, “facial animation”, and “audio-driven animation”, which helped retrieve some strong model matches that the plain search had overlooked.

How to implement HyDE in your own search system

Let me show you how HyDE can be added on top of an existing semantic search system to dramatically improve its quality.

Let’s start with a simplified code sketch based on my current search flow. Here’s the full file. You can skim the whole thing before we look at the individual parts:

```javascript
const fetchData = async (query) => {
  // Generate a hypothetical document for the query
  const hypotheticalDocument = await generateHypotheticalDocument(query);

  // Embed the hypothetical document instead of the raw query
  const embeddingResponse = await openAi.createEmbedding({
    model: "text-embedding-ada-002",
    input: hypotheticalDocument,
  });
  const embedding = embeddingResponse.data.data[0].embedding;

  // Search the real model embeddings using the hypothetical document embedding
  const { data: modelsData, error } = await supabase.rpc("search_models", {
    query_embedding: embedding,
    similarity_threshold: 0.75,
    match_count: 10,
  });
  // Surface database errors instead of silently returning null
  if (error) throw error;

  return modelsData;
};

const generateHypotheticalDocument = async (query) => {
  // Use GPT-3 to expand the query into a hypothetical ideal document
  const prompt = `The user entered the following search query:

"${query}"

Please generate a detailed hypothetical document that would be highly relevant to answering this query. The document should use more technical language and expand on the key aspects of the query.

Hypothetical Document:`;

  const response = await openAi.createCompletion({
    model: "text-davinci-002",
    prompt: prompt,
    max_tokens: 200,
    n: 1,
    stop: null,
    temperature: 0.5,
  });

  // Take the first (and only) completion and strip surrounding whitespace
  const hypotheticalDocument = response.data.choices[0].text.trim();
  return hypotheticalDocument;
};
```

Let’s see how it works. We’ll start with the fetchData function, which is the main entry point for the search process. First up:

```javascript
const hypotheticalDocument = await generateHypotheticalDocument(query);
```

This line calls the generateHypotheticalDocument function (which we'll explain in more detail later) to generate a hypothetical ideal document based on the user's search query.

The hypothetical document is a key part of the HyDE approach, as it aims to capture the user’s search intent in a more detailed and technical way than the original query.

```javascript
const embeddingResponse = await openAi.createEmbedding({
  model: "text-embedding-ada-002",
  input: hypotheticalDocument,
});
const embedding = embeddingResponse.data.data[0].embedding;
```

These lines use OpenAI’s text-embedding-ada-002 model to generate an embedding vector for the hypothetical document. I think it's the best embedding option out there so far.

Embeddings are a way to represent text as a dense vector of numbers, where similar texts have similar vectors. This allows us to perform semantic similarity searches.

By embedding the hypothetical document instead of the original query, we aim to get a vector representation that better captures the expanded search intent. Next up:

```javascript
const { data: modelsData, error } = await supabase.rpc("search_models", {
  query_embedding: embedding,
  similarity_threshold: 0.75,
  match_count: 10,
});
```

This part performs the actual similarity search in the model database. It calls a remote procedure (search_models) on the Supabase database, passing in the embedding of the hypothetical document as the query_embedding.

The similarity_threshold and match_count parameters control how closely the results need to match and how many to return.

The search looks for model embeddings that are most similar to the query embedding based on cosine similarity.
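The body of search_models isn’t shown in this post, so the following is only a rough in-memory sketch of how a similarity threshold and a match count typically interact. The function name searchModels, the toy two-dimensional embeddings, and the model names are all made up for illustration; the real function runs inside the database.

```javascript
// Hypothetical in-memory stand-in for a "search_models"-style function.
// It only illustrates how a similarity threshold and a match count interact.
const dot = (a, b) => a.reduce((sum, x, i) => sum + x * b[i], 0);
const cosine = (a, b) => dot(a, b) / Math.sqrt(dot(a, a) * dot(b, b));

function searchModels(models, queryEmbedding, similarityThreshold, matchCount) {
  return models
    .map((model) => ({
      name: model.name,
      similarity: cosine(model.embedding, queryEmbedding),
    }))
    .filter((m) => m.similarity > similarityThreshold) // drop weak matches
    .sort((a, b) => b.similarity - a.similarity) // best matches first
    .slice(0, matchCount); // cap the number of results
}

// Toy 2-dimensional embeddings and made-up model names, for illustration only
const toyModels = [
  { name: "lip-sync-model", embedding: [0.9, 0.1] },
  { name: "face-animator", embedding: [0.7, 0.7] },
  { name: "image-upscaler", embedding: [0.1, 0.9] },
];

const results = searchModels(toyModels, [1, 0], 0.5, 2);
console.log(results.map((m) => m.name)); // [ 'lip-sync-model', 'face-animator' ]
```

Raising the threshold trades recall for precision, while the match count only caps how many of the surviving results are returned.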

Now let’s look at the generateHypotheticalDocument function:

```javascript
const prompt = `The user entered the following search query:

"${query}"

Please generate a detailed hypothetical document that would be highly relevant to answering this query. The document should use more technical language and expand on the key aspects of the query.

Hypothetical Document:`;
```

This part constructs the prompt that will be sent to the language model to generate the hypothetical document.

The prompt includes the user’s original query and instructions for the model to generate a detailed, technical document that expands on the query’s key aspects. Crafting an effective prompt is crucial for guiding the language model to generate useful hypothetical documents.
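If you plan to experiment with prompt wording, it can help to pull the prompt construction into its own small function. The sketch below is one possible shape, not part of my current code: buildHydePrompt and the maxQueryLength option are hypothetical names, and the whitespace cleanup and length cap are assumptions about what a reasonable safeguard might look like.

```javascript
// Hypothetical helper: builds the HyDE prompt from a user query.
// `maxQueryLength` is an illustrative option, not part of the original code.
function buildHydePrompt(query, { maxQueryLength = 500 } = {}) {
  // Collapse whitespace and cap the length so a pasted essay cannot blow up the prompt
  const cleaned = query.replace(/\s+/g, " ").trim().slice(0, maxQueryLength);
  if (cleaned.length === 0) {
    throw new Error("Query must not be empty");
  }
  return [
    "The user entered the following search query:",
    `"${cleaned}"`,
    "Please generate a detailed hypothetical document that would be highly relevant to answering this query. The document should use more technical language and expand on the key aspects of the query.",
    "Hypothetical Document:",
  ].join("\n\n");
}

console.log(buildHydePrompt("  talking portrait   from image and audio "));
```

Keeping the template in one place makes it easier to swap in alternative instructions and compare the results side by side.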

Now for the next part:

```javascript
const response = await openAi.createCompletion({
  model: "text-davinci-002",
  prompt: prompt,
  max_tokens: 200,
  n: 1,
  stop: null,
  temperature: 0.5,
});
```

These lines use OpenAI’s text-davinci-002 model to generate the hypothetical document based on the prompt.

The max_tokens parameter limits the length of the generated document, while temperature controls the randomness (higher values make the output more diverse but potentially less focused). Adjusting these parameters can help tune the quality and variety of the generated documents.

Next, we have:

```javascript
const hypotheticalDocument = response.data.choices[0].text.trim();
```

This line extracts the generated hypothetical document text from the GPT-3 API response. The generated text is then returned to be used in the embedding step.

Then we can just retrieve the data as before, this time using the hypothetical document as the query, and get far more relevant results thanks to the expanded search term and all its extra, juicy context!

Recap — what did we just build?

I hope the code and my explanation demonstrate how the HyDE approach can be implemented on top of an existing semantic search system. It’s not that hard! The key idea is this: By inserting the hypothetical document generation and embedding steps into the search pipeline, we aim to improve the search results by better capturing the user’s intent.

To recap, the key parts were:

Using a language model to expand the user’s query into a more detailed, technical hypothetical document

Embedding the hypothetical document to get a vector representation that aligns with the model database

Performing a similarity search between the hypothetical document embedding and the model embeddings to find the most relevant results

Of course, this is just a simplified implementation (simplementation?), and there are many details and optimizations to consider in a real implementation. But it illustrates the core flow and components of the HyDE approach.

Taking it further

We can build on our simple foundations to take our solution even further! Photo by Volodymyr Hryshchenko on Unsplash

This is just an initial prototype, and there’s still a lot of room for experimentation and refinement. Some of the key open questions I’m thinking about:

What’s the optimal prompt structure and output length for generating useful hypothetical documents? I’d like to try different prompt templates and output sizes to see how they impact result quality.

How can the hypothetical documents be made more interpretable and transparent to users? It could be confusing if users don’t understand why certain terms are being added. I’m considering showing parts of the generated text as a “query interpretation” to provide more insight into the process.

To what extent does the result quality depend on the specific LLM and embedding models used? I’m curious to benchmark different model combinations (e.g. GPT-4 vs GPT-3, Contriever vs OpenAI embeddings, etc.) to find the best recipe.

How can this approach be efficiently scaled to work over millions of database entries? Inference on large LLMs like GPT-3 is expensive and slow. I’ll need to look into caching, optimizing, or distilling the models to make this viable at scale.
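On the caching idea, one simple starting point is to memoize the hypothetical document per normalized query, so repeated searches skip the LLM call entirely. This is a minimal sketch under stated assumptions: withCache is a hypothetical helper, the cache lives in process memory with no eviction or expiry, and the stub generator stands in for the real LLM call.

```javascript
// Hypothetical caching wrapper: `generate` is any async function that maps a
// query string to a hypothetical document (for example, an LLM call).
function withCache(generate) {
  const cache = new Map();
  return async (query) => {
    // Normalize so trivially different queries share one cache entry
    const key = query.trim().toLowerCase();
    if (!cache.has(key)) {
      // Store the promise itself so concurrent identical queries share one call
      const pending = generate(query).catch((err) => {
        cache.delete(key); // do not cache failures
        throw err;
      });
      cache.set(key, pending);
    }
    return cache.get(key);
  };
}

// Usage with a stub generator standing in for the real LLM call
let calls = 0;
const stubGenerate = async (query) => {
  calls += 1;
  return `Hypothetical document for: ${query}`;
};
const cachedGenerate = withCache(stubGenerate);

(async () => {
  await cachedGenerate("image to 3D model");
  await cachedGenerate("  Image to 3D model ");
  console.log(calls); // 1
})();
```

A real deployment would likely need a size limit and a shared store so the cache survives restarts, but the wrapper shape stays the same.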

I’m excited about the potential for language models to help make search interfaces like AIModels more intuitive and powerful for a wider audience. Bridging the semantic gap between how users naturally express their needs and how AI models are technically described feels like an important piece of the puzzle. Techniques like HyDE point a way toward search experiences that deeply understand intent and meet users where they are.

My long-term goal is to keep refining this interface until anyone can easily find relevant AI models for their needs, no matter their level of technical expertise. Whether you want to “turn your profile picture into a Studio Ghibli illustration”, “generate 3D models from images”, “create text-to-speech for a virtual assistant”, or “find the best logo design AI”, I want AIModels to be a powerful starting point.

I’ll be sharing more details as I continue experimenting with HyDE and other approaches. If you’re curious to learn more about the technical side, I recommend checking out the original paper. And if you have ideas or feedback on the AIModels search experience, I’d love to hear from you! Let me know what you think in the comments.
