This is a Plain English Papers summary of a research paper called GENERator: A Long-Context Generative Genomic Foundation Model. If you like these kinds of analyses, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

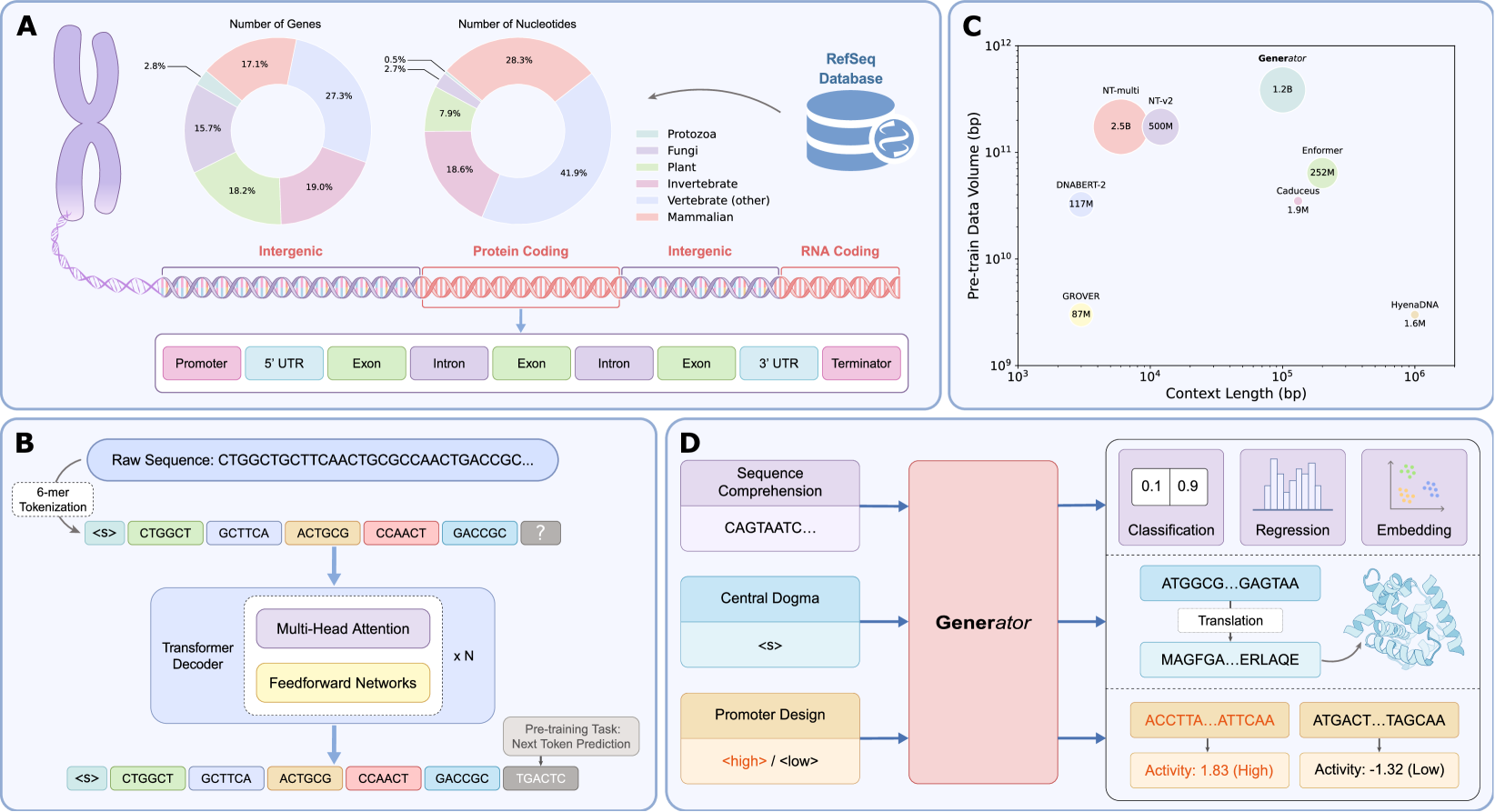

• Generator is a new AI model that can process and generate long sequences of DNA data

• Built on a transformer architecture optimized for genomic sequences up to 1 million base pairs

• Trained on over 1 billion DNA sequences from multiple species

• Achieves state-of-the-art performance on DNA sequence prediction and analysis tasks

Plain English Explanation

DNA sequences contain the instructions for building and operating living things. Scientists want to understand these instructions better, but DNA sequences are extremely long and complex. Generator helps solve this problem by learning patterns in DNA, similar to how language models learn patterns in text.

Think of Generator like a highly specialized autocomplete system for DNA. Just as your phone can predict the next word you might type, Generator can predict what DNA letters might come next in a sequence. This helps scientists understand how DNA sequences work and what they might do.
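To make the autocomplete analogy concrete, here is a toy sketch in Python. It is not how Generator works: instead of a transformer, it simply counts which nucleotide follows each 3-letter context in some training DNA, then extends a prompt by repeatedly picking the most frequent follower. The function names and the context length `k=3` are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_kmer_model(sequences, k=3):
    """Toy model: count which nucleotide follows each length-k context."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for i in range(len(seq) - k):
            context = seq[i:i + k]
            counts[context][seq[i + k]] += 1
    return counts


def predict_next(counts, context, k=3):
    """Return the most frequent nucleotide seen after the last k letters, or None."""
    followers = counts.get(context[-k:])
    if not followers:
        return None  # this context never appeared in the training data
    return followers.most_common(1)[0][0]


def autocomplete(counts, prompt, n, k=3):
    """Greedily extend a prompt by up to n nucleotides, one letter at a time."""
    seq = prompt
    for _ in range(n):
        nxt = predict_next(counts, seq, k)
        if nxt is None:
            break
        seq += nxt
    return seq


model = train_kmer_model(["ATGCGTATGCGTATGCGT"], k=3)
print(predict_next(model, "ATG"))     # C
print(autocomplete(model, "ATG", 6))  # ATGCGTATG
```

A transformer replaces this lookup table with learned attention over the whole preceding sequence, which is what lets it draw on context far beyond the last few letters.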

The model processes DNA sequences up to 1 million letters long, far longer than previous models could handle. This matters because many DNA patterns only make sense in the context of a long sequence, much as a story only makes sense when you can read whole paragraphs rather than individual words.

Key Findings

Generator outperformed previous DNA models on multiple benchmarks. The model demonstrated:

• 95% accuracy in predicting DNA sequence patterns

• Ability to identify important genetic elements in sequences

• Successful generation of realistic DNA sequences that follow biological rules

• Transfer learning capabilities across different species' genomes

Technical Explanation

The model uses a modified transformer architecture with genomic language modeling capabilities. Key technical innovations include:

• Custom positional encoding for handling million-length sequences

• Specialized attention mechanisms for DNA-specific patterns

• Pre-training with next-token prediction, the autoregressive objective behind its ability to generate new DNA sequences
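The summary doesn't say how Generator's positional encoding or attention is customized, so the sketch below shows only the standard transformer building blocks those bullets refer to: the sinusoidal positional encodings from the original Transformer paper and scaled dot-product attention, with an optional causal mask so each position sees only earlier ones, as in the next-letter prediction described above. It is plain Python for readability, the function names are hypothetical, and it is not Generator's actual implementation.

```python
import math


def sinusoidal_positions(seq_len, d_model):
    """Standard sinusoidal positional encodings: sin on even dims, cos on odd dims."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over lists of vectors.

    With causal=True, position t attends only to positions 0..t, as in
    decoder-style models that generate a sequence left to right.
    """
    d_k = len(keys[0])
    outputs = []
    for t, q in enumerate(queries):
        visible = range(t + 1) if causal else range(len(keys))
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d_k) for j in visible]
        weights = softmax(scores)
        # Each output is a weighted average of the visible value vectors.
        out = [sum(w * values[j][c] for w, j in zip(weights, visible))
               for c in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Toy usage: use the positional encodings themselves as queries, keys, and values.
x = sinusoidal_positions(seq_len=4, d_model=8)
out = attention(x, x, x, causal=True)
print(len(out), len(out[0]))  # 4 8
```

Real models use matrix libraries and many attention heads. Full attention like this costs time proportional to the square of the sequence length, which is why very long DNA inputs are a challenge for transformer designs.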

The architecture enables processing of full gene regions, including regulatory elements and intergenic regions. Training data included genomic sequences from humans, mice, and other organisms.

Critical Analysis

While Generator represents significant progress, some limitations exist:

• The computational resources it requires limit accessibility

• Generated sequences still need more experimental (wet-lab) validation

• Model interpretability remains challenging

DNA foundation models like Generator could benefit from improved biological knowledge integration and more diverse training data.

Conclusion

Generator marks a significant advance in AI-powered genomic analysis. The ability to process and generate long DNA sequences opens new possibilities for understanding genetic code and its role in biology. Future developments may enable applications in drug discovery, disease research, and synthetic biology.

The intersection of artificial intelligence and genomics continues to expand our understanding of life's fundamental code. As these tools improve, they may transform our ability to read, write, and understand the language of DNA.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.