Beyond Limited Data: Self-play LLM Theorem Provers with Iterative Conjecturing and Proving

This is a Plain English Papers summary of a research paper called Beyond Limited Data: Self-play LLM Theorem Provers with Iterative Conjecturing and Proving. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

• Introduces iterative self-play approach for LLM theorem provers to generate their own training data

• Combines automated conjecturing and proving to expand beyond limited formal mathematics datasets

• Achieves significant improvements in theorem proving capabilities through recursive self-improvement

• Demonstrates effectiveness on complex mathematical problems without human supervision

Plain English Explanation

Think of automated theorem proving like teaching a computer to solve complex math puzzles on its own. The current challenge is that these systems need lots of examples to learn from, but there aren't many available. This research introduces a clever solution - letting the AI create and solve its own math problems.

The system works like a student who practices by making up questions and then trying to answer them. As it does this repeatedly, it gets better at both creating meaningful problems and solving them correctly. This self-improvement cycle helps the AI learn more effectively than if it only studied existing problems.

Mathematical reasoning develops through this back-and-forth between making guesses about what might be true and then proving whether those guesses are correct. The researchers built their system to mirror this natural learning process.

Key Findings

The self-play approach led to major improvements in the AI's ability to prove theorems. Key results include:

• The system generated high-quality training data without human input

• Performance improved significantly through iterative self-improvement cycles

• The AI learned to create increasingly complex and meaningful conjectures

• Theorem proving capabilities matched or exceeded previous methods that relied on human-created datasets

Technical Explanation

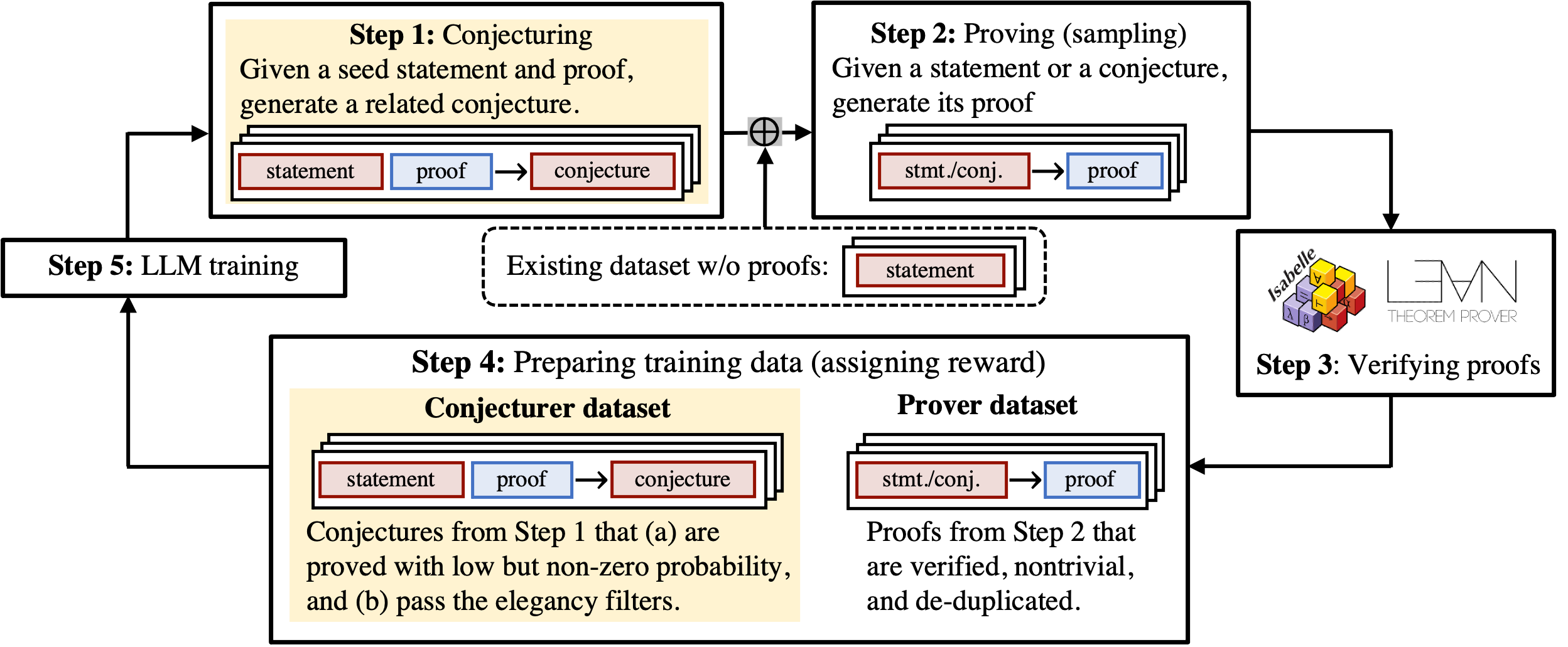

The research implements a dual-role framework where the AI alternates between generating conjectures and attempting to prove them. This creates a feedback loop where successful proofs inform better conjecture generation.

The system uses a large language model as its foundation, fine-tuned through the self-play process. It employs verification mechanisms to ensure generated conjectures are both novel and provable.

Automated reasoning capabilities develop through repeated cycles of conjecture generation, proof attempts, and model updates. The process creates a growing dataset of proven theorems that further enhance the system's abilities.

Critical Analysis

While promising, several limitations exist:

• The system may sometimes generate trivial or redundant conjectures

• Verification of proof correctness remains challenging

• The computational resources required for iterative self-improvement are substantial

Future work could focus on improving the quality control of generated conjectures and developing more efficient training methods. The approach might also benefit from incorporating human feedback at strategic points.

Conclusion

This research represents a significant step toward more autonomous and capable AI theorem provers. By enabling systems to generate their own training data through self-play, it addresses a fundamental limitation in the field.

The success of this approach suggests similar self-improvement techniques might be valuable in other areas of AI where training data is scarce. As these systems continue to develop, they could become powerful tools for advancing mathematical knowledge and understanding.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.