DC-VSR: Spatially and Temporally Consistent Video Super-Resolution with Video Diffusion Prior

This is a Plain English Papers summary of a research paper called DC-VSR: Spatially and Temporally Consistent Video Super-Resolution with Video Diffusion Prior. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

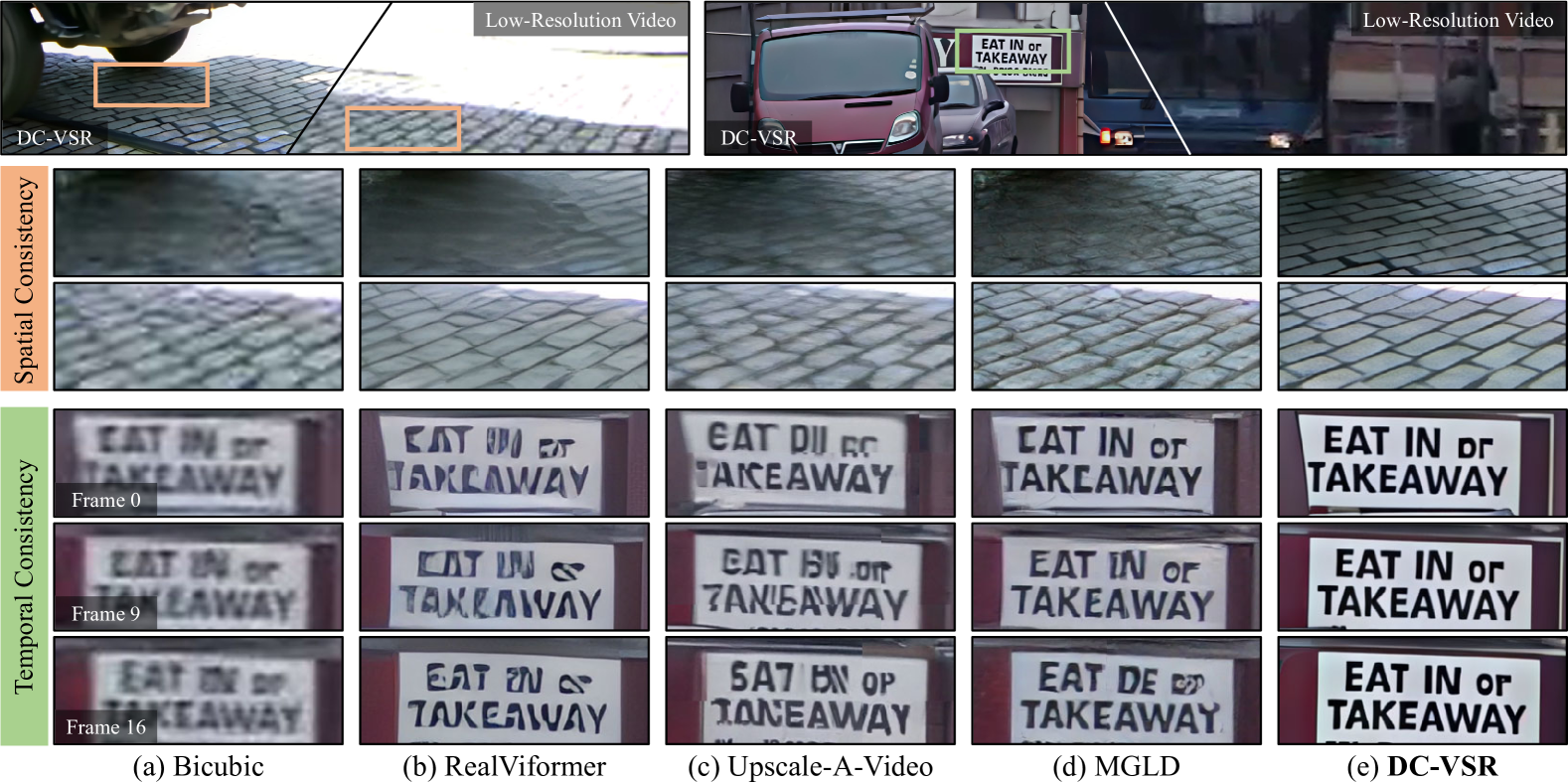

- New method called DC-VSR enhances low-resolution videos using AI diffusion models

- Creates high-quality video upscaling with consistent motion and details

- Combines video diffusion models with super-resolution techniques

- Achieves state-of-the-art results on standard video enhancement benchmarks

- Preserves temporal consistency across video frames

Plain English Explanation

Video enhancement is like turning a blurry YouTube video into crystal clear footage. DC-VSR is a new AI tool that makes low-quality videos look better while keeping natural motion. Think of it like a smart filter that knows how things should move and look in real life.

The system works in two main steps. First, it uses AI to understand what the video should look like in high quality. Then, it carefully enhances each frame while making sure moving objects stay smooth and natural. It's similar to how a restoration expert might carefully clean and sharpen an old film, but done automatically by AI.

Video super-resolution has been challenging because previous methods often made videos look artificial or jittery. DC-VSR solves this by using special AI models trained on lots of high-quality videos to know what good motion looks like.

Key Findings

The research shows DC-VSR outperforms existing methods in several ways:

- Creates sharper, more detailed videos compared to previous techniques

- Maintains consistent motion without artifacts or flickering

- Works well on different types of videos and movements

- Processes videos faster than similar AI-based approaches

- Enhances perceptual quality significantly in real-world scenarios

Technical Explanation

DC-VSR builds on recent advances in diffusion models and video enhancement. The system uses a two-stage approach: first applying a video diffusion prior to understand temporal relationships, then using this knowledge for super-resolution.

The architecture incorporates motion-guided processing to maintain consistency between frames. It uses a novel loss function that balances detail preservation with temporal smoothness.

The method processes videos in sliding windows to handle long sequences efficiently while maintaining consistent quality throughout the footage.

Critical Analysis

While DC-VSR shows impressive results, some limitations exist:

- High computational requirements for processing long videos

- Occasional artifacts in scenes with extreme motion

- Limited testing on diverse video types and conditions

- Space-time consistency could be improved further

More research is needed to reduce processing requirements and handle challenging scenarios like rapid camera movements or complex textures.

Conclusion

DC-VSR represents a significant step forward in video enhancement technology. Its ability to maintain temporal consistency while improving resolution could impact various fields from media restoration to medical imaging. The research opens new paths for combining diffusion models with video processing tasks.

The success of this approach suggests similar techniques could be applied to other video enhancement challenges like stabilization or frame interpolation. As computational efficiency improves, these methods could become standard tools for video processing.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.